One of the essential skills in understanding object-oriented concepts is correctly identifying the entities that make up the domain model and the relationships among them. The domain model is a representation of the real-world problem area that the software is meant to solve. A domain model can be built with a variety of modeling techniques, the dominant one these days being the Unified Modeling Language, or UML.

The traditional approach to inheritance has been to define a superclass and one or more subclasses. For example, Car could be a general class embodying all the attributes and behavior common to cars, and a specific car segment such as sedan, sports car or wagon could be a subclass inheriting from Car. In the real world, however, relationships among entities are much more complicated than that. As the object-oriented paradigm matured, object-oriented languages also advanced and gave us more fundamental constructs for abstracting and realizing relationships that do not fit well into the classical inheritance model.

OK, so time to get to the gist of the matter. The following are the principal relationships that can be used to build a domain model and program against:

1. Generalization relationship

2. Dependency relationship

3. Realization relationship

4. Association relationship

a) Aggregation relationship

b) Composition relationship

A complementary concept that goes hand in hand with these relationships is multiplicity, which specifies how many instances of one class can be linked to a single instance of the other (for example 1, 0..1 or *).

1. Generalization Relationship

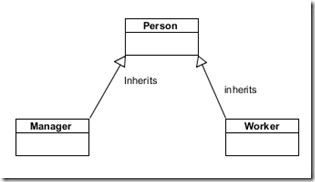

A generalization relationship is a parent-child relationship between classes, embodying the classical OOP concept of inheritance. It expresses an “is-a” relationship and is shown as a solid line with a hollow triangular arrowhead, drawn from the derived class and pointing to the parent class.

Example: A manager and a worker are both employees at a higher level of abstraction, so the Manager and Worker classes can both be thought of as deriving from the Employee class.

Figure 1 Generalization relationship
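A minimal C# sketch of Figure 1. The `Name` property and `Describe()` method are hypothetical members added for illustration; the original only names the three classes.

```csharp
// Parent class: holds what every employee has in common.
public class Employee
{
    public string Name { get; }

    public Employee(string name)
    {
        Name = name;
    }

    // Hypothetical behavior that subclasses may override.
    public virtual string Describe() => $"{Name} is an employee";
}

// Manager "is-an" Employee: it inherits Name and specializes Describe().
public class Manager : Employee
{
    public Manager(string name) : base(name) { }

    public override string Describe() => $"{Name} manages a team";
}

// Worker "is-an" Employee as well.
public class Worker : Employee
{
    public Worker(string name) : base(name) { }

    public override string Describe() => $"{Name} works on tasks";
}
```

Because both subclasses are employees, `Employee e = new Manager("Ann");` compiles, and calling `e.Describe()` runs the `Manager` override.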

2. Dependency Relationship

As the name suggests, a dependency relationship indicates that one entity depends on another. It is also called a client-supplier relationship, in which the client depends on the supplier to accomplish something. An important defining characteristic of this kind of relationship is that a change to the supplier can affect the client. In UML, a dependency is shown as a dashed line with an open arrowhead, going from the client and pointing to the supplier.

![clip_image001[5] clip_image001[5]](http://www.thinkingcog.com/image.axd?picture=clip_image001%5B5%5D_thumb.png)

Figure 2 Dependency Relationship

Let’s take an example: I am a developer and I use a laptop to write code, so I depend on it. If you replace the laptop with a tablet, that certainly affects me, because it will have an impact on my productivity.

![clip_image001[7] clip_image001[7]](http://www.thinkingcog.com/image.axd?picture=clip_image001%5B7%5D_thumb.png)

Figure 3 Dependency relationship between me and my computer

In terms of code, this is the Me class using a Computer object, passed as a parameter, in its DevelopCode method.

```csharp
public class Computer
{
    …
}

public class Me
{
    // Me uses a Computer only as a method parameter; it does not store it.
    public object DevelopCode(Computer computer)
    {
        …
    }
}
```
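A runnable variant of the snippet above, assuming a hypothetical `Compile` method on `Computer` and having `DevelopCode` return a `string` instead of `object`. The point it illustrates is that `Me` touches a `Computer` only inside the method call and keeps no reference to it afterwards.

```csharp
// Supplier: the class the client depends on.
public class Computer
{
    public string Model { get; }

    public Computer(string model)
    {
        Model = model;
    }

    // Hypothetical operation the client needs.
    public string Compile(string source) => $"Compiled '{source}' on {Model}";
}

// Client: uses a Computer only as a method parameter.
// There is no field of type Computer, so the relationship lasts
// only for the duration of the call (a dependency).
public class Me
{
    public string DevelopCode(Computer computer, string source)
    {
        return computer.Compile(source);
    }
}
```

If the signature of `Computer.Compile` changes, `Me.DevelopCode` has to change with it, which is the "a change to the supplier affects the client" property described above.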

3. Realization Relationship

This form of relationship is primarily used to model classes that implement an interface: the objects of such classes realize the implemented interface(s). The relationship is depicted by joining the class and the interface with a dashed line with a hollow triangular arrowhead pointing to the realized interface.

![clip_image001[1] clip_image001[1]](http://www.thinkingcog.com/image.axd?picture=clip_image001%5B1%5D_thumb.png)

Figure 4 Realization relationship

A practical example in the .NET world is implementing the IDisposable interface, which requires the class to provide a public Dispose() method.

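```csharp
using System;

public class MyClass : IDisposable
{
    // Realizes IDisposable by providing its only member, a public void Dispose().
    public void Dispose()
    {
        // Release any resources held by this object.
    }
}
```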
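A slightly fuller sketch, assuming a hypothetical `Connection` class with an `IsDisposed` flag. Because `Connection` realizes `IDisposable`, code written only against the interface, such as the `using` statement, can call `Dispose()` on it without knowing the concrete type.

```csharp
using System;

// Connection realizes (implements) the IDisposable interface.
public class Connection : IDisposable
{
    public bool IsDisposed { get; private set; }

    // The one member IDisposable requires.
    public void Dispose()
    {
        if (IsDisposed)
        {
            return; // Calling Dispose more than once is harmless.
        }

        // A real class would release its resources here.
        IsDisposed = true;
    }
}
```

Usage: `using (var conn = new Connection()) { … }` calls `conn.Dispose()` automatically when the block exits.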

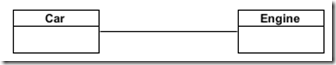

4. Association Relationship

An association relationship signifies a stronger relationship between two entities than a dependency does. While a generalization relationship signifies an “is-a” relationship, an association relationship signifies a “has-a” relationship. This type of relationship comes into play when the relationship between two entities is more one of containment than of superclass and subclass. A car “has-a” engine (that “a” should have been “an”, but I just want to make my example clear without getting into English grammar rules). Similarly, a library “has” books, and a student “has-a” schedule.

An association relationship is shown as a solid line between the two entities sharing the relationship.

![clip_image001[3] clip_image001[3]](http://www.thinkingcog.com/image.axd?picture=clip_image001%5B3%5D_thumb.png)

Figure 5 Association relationship

Figure 6 A Car "has-a" engine

In code, this stronger relationship typically manifests as one class holding an object of the other class as a field or property.
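A minimal sketch of the field-or-property form, using the library and books example from above. The `Title` property and the `Add` method are hypothetical. Unlike the dependency example, `Library` keeps references to its books in a property, so the relationship persists between method calls.

```csharp
using System.Collections.Generic;

public class Book
{
    public string Title { get; }

    public Book(string title)
    {
        Title = title;
    }
}

// Library "has" books: the association is held in a property,
// so it outlives any single method call.
public class Library
{
    public List<Book> Books { get; } = new List<Book>();

    public void Add(Book book) => Books.Add(book);
}
```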

In real life, entities rarely stand all by themselves. They are complex, and they are complex because of their interactions with other entities. Looking back at the association examples, you may notice that in some of them the lifetime of one entity depends on the other, while in others it does not. An engine can be taken out of a car and fitted into another car, and a library can sell a book if no patron borrows it for a long time, but a schedule has no meaning without a student. To capture this distinction, association relationships can be divided into aggregation and composition, depending on whether the lifetime of one entity depends on the lifetime of the other, which in turn reflects how strong the relationship between the two entities is.

4.1 Aggregation Relationship

An aggregation relationship is a special form of association relationship in which the lifetime of the individual entity is independent of the aggregate entity (the complex object). In code, the individual entity's class is used as the type of a field or property in the aggregate entity's class. In UML, aggregation is denoted by a solid line between the two entities, with a hollow diamond at the end attached to the aggregate entity.

![clip_image001[5] clip_image001[5]](http://www.thinkingcog.com/image.axd?picture=clip_image001%5B5%5D_thumb_1.png)

Figure 7 Aggregation relationship

![clip_image002[5] clip_image002[5]](http://www.thinkingcog.com/image.axd?picture=clip_image002%5B5%5D_thumb.png)

Figure 8 Car has an engine that can exist independently outside of the car

```csharp
public class Engine
{
    public int EngineCapacity { get; set; }

    …
}

public class Car
{
    // Car refers to an Engine object that can exist independently of it.
    public Engine Engine { get; set; }
}
```
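To show the independent lifetime in action, here is a self-contained sketch that restates the `Engine` and `Car` classes above and adds a hypothetical `Garage.Transplant` helper. The engine is created outside any car, so it can be moved to another car and keeps existing regardless of what happens to the first one.

```csharp
#nullable enable

// Part: an Engine can be created, stored, and reused without any Car.
public class Engine
{
    public int EngineCapacity { get; set; }
}

// Aggregate: a Car refers to an Engine it did not create.
public class Car
{
    public Engine? Engine { get; set; }
}

public static class Garage
{
    // Hypothetical helper: moves the engine from one car to another.
    // The Engine object itself is untouched; only the references change.
    public static void Transplant(Car from, Car to)
    {
        to.Engine = from.Engine;
        from.Engine = null;
    }
}
```

Because `Car` neither creates nor destroys its `Engine`, the engine's lifetime is governed by whoever holds a reference to it, which is what the hollow diamond in Figure 7 expresses.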

4.2 Composition Relationship

A composition relationship is a special form of association relationship in which the lifetime of the individual entity depends on the lifetime of the composite entity (the complex object). In code, the composite typically creates and owns its parts rather than receiving them from outside; in the example below, the individual entity's class is also defined within the composite entity's class. In UML, composition is denoted by a solid line between two entities, with a filled diamond at the end attached to the composite entity.

![clip_image001[7] clip_image001[7]](http://www.thinkingcog.com/image.axd?picture=clip_image001%5B7%5D_thumb_1.png)

Figure 9 Composition relationship

![clip_image002[7] clip_image002[7]](http://www.thinkingcog.com/image.axd?picture=clip_image002%5B7%5D_thumb.png)

Figure 10 Book is made up of chapter(s)

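```csharp
public class Book
{
    // Chapter is defined inside Book to signal that a chapter
    // belongs to, and only makes sense within, a book.
    public class Chapter
    {
        public int NoOfPages { get; set; }

        …
    }

    …
}
```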
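A sketch of one way to make the ownership explicit, assuming hypothetical `AddChapter`, `Chapters` and `TotalPages` members. `Book` creates every `Chapter` itself and only exposes a read-only wrapper, so chapters are never handed in from outside or shared between books. In a garbage-collected language like C# this is a design convention rather than something the runtime enforces.

```csharp
using System.Collections.Generic;
using System.Linq;

public class Book
{
    // Part type nested inside the whole, as in the snippet above.
    public class Chapter
    {
        public int NoOfPages { get; }

        // Internal: only code in the same assembly can construct a Chapter.
        internal Chapter(int noOfPages)
        {
            NoOfPages = noOfPages;
        }
    }

    // The book owns this list; chapters are never accepted from outside.
    private readonly List<Chapter> chapters = new List<Chapter>();

    // Read-only wrapper: callers can inspect chapters but cannot add or remove them.
    public IReadOnlyList<Chapter> Chapters => chapters.AsReadOnly();

    // The book is the only place chapters are created, so each chapter
    // belongs to exactly one book.
    public void AddChapter(int noOfPages)
    {
        chapters.Add(new Chapter(noOfPages));
    }

    public int TotalPages => chapters.Sum(c => c.NoOfPages);
}
```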

I hope this post helps clarify some of the concepts related to relationships between classes.


Diagrams made with Visual Paradigm for UML Community Edition

![clip_image001[11] clip_image001[11]](http://www.thinkingcog.com/image.axd?picture=clip_image001%5B11%5D_thumb.png)